Information overload can be daunting. Endless blog posts, reports, and articles compete for our attention, making it nearly impossible to consume all the content we need. What if you could instantly distill long texts from a specific URL into concise, actionable insights? That is a reality you can create yourself. Join me as I pull back the curtain on my journey to build an AI blog summarizer with Python, detailing every step that turned a seemingly complex idea into a working, intelligent tool you can run on your own PC. Ready to harness Python and artificial intelligence to revolutionise how you repurpose, consume, and create content? Let’s get to it.

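To start this project, download and install Python and a code editor such as VS Code. You will also need the third-party libraries the script imports, which you can install with `pip install openai requests beautifulsoup4 readability-lxml`.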

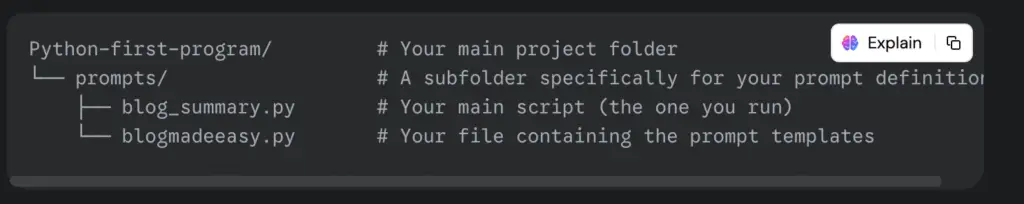
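Before writing the summarizer, you can optionally confirm that those libraries are installed. The sketch below uses the standard library's `importlib.util.find_spec` to list any missing packages. The helper name `missing_packages` is my own and is not part of the project. It only checks that a module with the given name can be found, so an unrelated package that happens to be called `readability` would also pass the check.

```python
import importlib.util

# Modules the summarizer imports, mapped to the pip package that provides each one
REQUIRED = {
    "openai": "openai",
    "requests": "requests",
    "bs4": "beautifulsoup4",
    "readability": "readability-lxml",
}


def missing_packages(required=REQUIRED):
    """Return the pip package names whose modules cannot be found."""
    return [package for module, package in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages, install with: pip install " + " ".join(missing))
    else:
        print("All dependencies found.")
```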

Now, let’s set up the structure of the project. Create your main project folder (Python-first-program), then a sub-folder named prompts. Inside prompts, create two files: one containing your script and the other containing your prompt templates.
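If you would rather create the folders from Python, here is a small sketch using `pathlib`. The file names are assumptions taken from the code later in this post: a comment in the script calls it `blog_summary.py`, and the prompt file it imports is `blogmadeeasy.py`. The helper name `create_project` is hypothetical.

```python
from pathlib import Path


def create_project(root="Python-first-program"):
    """Create <root>/prompts with empty script and prompt files; return the prompts folder."""
    prompts_dir = Path(root) / "prompts"
    prompts_dir.mkdir(parents=True, exist_ok=True)  # no error if the folders already exist
    for filename in ("blog_summary.py", "blogmadeeasy.py"):
        # Creates an empty file; an existing file keeps its content
        (prompts_dir / filename).touch(exist_ok=True)
    return prompts_dir
```

Calling `create_project()` with no arguments creates the folders relative to your current working directory.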

The Big Picture: What Our Code Does

At a high level, the summarizer script does the following:

- Fetches content: It visits a specific URL (a web address) and grabs the main text of the blog post, stripping away ads, menus, and other clutter.
- Summarizes content: It sends the cleaned-up text, along with a specific instruction (a “prompt”), to an artificial intelligence (AI) model provided by OpenAI.
- Displays the summary: The AI processes the request and returns a summary, which the program prints for you.
- Stays flexible: It lets you choose different types of summaries (short, medium, long, SEO-optimized) and keeps running, so you can try different summary types for the same article. To summarize another article, run the script again with a new URL.

Copy and paste the code below into your script file.

Script File code

```python
import openai
import requests
from bs4 import BeautifulSoup
from readability.readability import Document  # Correct readability import

# --- Configuration ---
# Set your OpenAI API key
# Important: For production, store this securely (e.g., environment variable, secrets manager)
openai.api_key = "Add your API key here"

# Direct import from blogmadeeasy.py since it's in the same directory (assuming blog_summary.py is in 'prompts' folder)
try:
    from blogmadeeasy import short_summary_prompt, medium_summary_prompt, long_summary_prompt, seo_summary_prompt
except ImportError as e:
    print(
        f"Error: Could not import prompts from blogmadeeasy.py. Please ensure the file exists and contains the necessary prompt variables. Details: {e}")
    # Fallback prompts if import fails (less ideal, but prevents script crash)
    short_summary_prompt = "Summarize: {blog_content}"
    medium_summary_prompt = "Summarize in detail: {blog_content}"
    long_summary_prompt = "Provide a comprehensive summary: {blog_content}"
    seo_summary_prompt = "Create an SEO-friendly summary: {blog_content}"


def fetch_blog_content(url):
    """
    Fetches the main textual content from a given URL using requests and readability.
    Includes a fallback if readability struggles.
    """
    try:
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'
        }
        response = requests.get(url, headers=headers, timeout=15)
        response.raise_for_status()  # Raise an error for HTTP failures such as 404

        html_content = response.text  # Use .text to get decoded string content

        # Attempt to extract main article content using readability
        doc = Document(html_content)
        article_html = doc.summary()
        if article_html:
            soup = BeautifulSoup(article_html, 'html.parser')
            text_content = soup.get_text(separator='\n', strip=True)
            if len(text_content) > 100:
                return text_content
            else:
                print("Warning: Readability extracted very little content. Attempting fallback.")

        # Fallback: search the full page for common content containers
        print("Attempting fallback content extraction...")
        soup = BeautifulSoup(html_content, 'html.parser')
        content_selectors = [
            'article', 'main', 'div.entry-content', 'div.post-content',
            'div[class*="content"]', 'div[id*="content"]'
        ]
        main_text_parts = []
        for selector in content_selectors:
            elements = soup.select(selector)
            for elem in elements:
                text = elem.get_text(separator='\n', strip=True)
                if len(text) > 200:
                    main_text_parts.append(text)
            # Stop at the first selector that yields content, so nested
            # containers (e.g. an <article> inside <main>) are not added twice
            if main_text_parts:
                break
        if main_text_parts:
            full_text = '\n\n'.join(main_text_parts)
            if len(full_text) > 100:
                return full_text

        print("Could not reliably extract main content using either method.")
        return None
    except requests.exceptions.RequestException as e:
        print(f"Network or request error: {e}")
        return None
    except Exception as e:
        print(f"An unexpected error occurred during content extraction: {e}")
        return None


def generate_summary(blog_content, summary_type, model="gpt-4"):
    """
    Generates a summarized version of the blog post content using OpenAI.
    """
    if not blog_content:
        return "Error: No blog content provided for summarization."

    # Pick the prompt template and output length for the requested summary type
    prompt_template = ""
    max_tokens_limit = 0
    if summary_type == "short":
        prompt_template = short_summary_prompt
        max_tokens_limit = 100
    elif summary_type == "medium":
        prompt_template = medium_summary_prompt
        max_tokens_limit = 250
    elif summary_type == "long":
        prompt_template = long_summary_prompt
        max_tokens_limit = 400
    elif summary_type == "seo":
        prompt_template = seo_summary_prompt
        max_tokens_limit = 120
    else:
        return "Error: Invalid summary type specified. Choose from 'short', 'medium', 'long', or 'seo'."

    prompt = prompt_template.format(blog_content=blog_content)
    if len(prompt) > 12000:
        print("Warning: Blog content is very long. Truncating for API call to prevent exceeding token limit.")
        prompt = prompt[:12000] + "\n\n... (content truncated due to length)"

    try:
        response = openai.chat.completions.create(
            model=model,
            messages=[{"role": "user", "content": prompt}],
            max_tokens=max_tokens_limit,
            temperature=0.7
        )
        return response.choices[0].message.content.strip()
    except openai.APIError as e:
        print(f"OpenAI API Error: {e}")
        return f"Error communicating with OpenAI: {e}"
    except Exception as e:
        return f"An unexpected error occurred during summary generation: {e}"


if __name__ == "__main__":
    print("--- Blog Post Summarizer ---")
    sample_url = 'https://j-insights.com/mac-connected-to-wi-fi-but-no-internet/'
    blog_url = input(
        f"Enter the URL of the blog post (or press Enter for sample '{sample_url}'): ").strip()
    if not blog_url:
        blog_url = sample_url
        print(f"Using sample URL: {blog_url}")

    print("\nFetching blog content...")
    blog_content = fetch_blog_content(blog_url)

    if blog_content:
        print(f"\n--- Content fetched ({len(blog_content)} characters) ---")
        print("Snippet:\n", blog_content[:700] + "..." if len(blog_content) > 700 else blog_content)
        print("\n----------------------------------")

        # Keep asking for summary types until the user types 'exit'
        while True:
            summary_type = input(
                "\nChoose summary type ('short', 'medium', 'long', 'seo', or 'exit' to quit): ").lower().strip()
            if summary_type == 'exit':
                print("Exiting summarizer.")
                break
            if summary_type in ["short", "medium", "long", "seo"]:
                print(f"Generating {summary_type.upper()} summary...")
                generated_summary = generate_summary(blog_content, summary_type)
                print(f"\n--- Generated {summary_type.upper()} Summary ---\n")
                print(generated_summary)
                print("\n----------------------------------")
            else:
                print("Invalid summary type. Please choose from 'short', 'medium', 'long', 'seo', or 'exit'.")
    else:
        print("\nFailed to retrieve blog content from the provided URL. Please check the URL and your internet connection.")
```

This is the main code for the AI blog summarizer project. Now let’s explain each part of it.

Importing the necessary libraries

To build the AI blog summarizer, we first import the libraries the script needs to download web pages, extract their text, and communicate with OpenAI’s AI model.

```python
import openai
import requests
from bs4 import BeautifulSoup
from readability.readability import Document  # Correct readability import
```

import openai: This line tells Python that our script will use the openai library. This library provides the tools to communicate with OpenAI’s powerful AI models (like GPT-4).

import requests: This line imports the requests library. This library is fantastic for making web requests. We use it to download the raw content of a web page from a given URL. Think of it as a specialized web browser that fetches pages just for your program.

from bs4 import BeautifulSoup: This imports the BeautifulSoup class from the bs4 library. BeautifulSoup is a powerful tool for parsing HTML and XML documents. Web pages are written in HTML, and BeautifulSoup helps us navigate through that HTML to find specific pieces of information or extract clean text. It’s like having a specialized pair of scissors and a map to cut out exactly what you need from a messy newspaper.

from readability.readability import Document: This line imports the Document class from the readability library. This library is designed to extract the main, human-readable content from a web page, stripping away boilerplate like navigation, ads, and footers. It’s excellent for getting just the article text.
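To see what BeautifulSoup contributes, here is a small offline sketch that parses a hard-coded HTML string instead of downloading a real page. The sample HTML and the helper name `extract_article_text` are made up for illustration. It uses the same calls as the script's fallback path: `select_one()` with a CSS selector and `get_text(separator='\n', strip=True)`.

```python
from bs4 import BeautifulSoup

# A made-up page: navigation and footer clutter around the actual article
SAMPLE_HTML = """
<html><body>
  <nav><a href="/">Home</a> <a href="/about">About</a></nav>
  <article>
    <h1>Fixing Wi-Fi</h1>
    <p>Restart your router.</p>
    <p>Then renew the DHCP lease.</p>
  </article>
  <footer>Copyright notice</footer>
</body></html>
"""


def extract_article_text(html, selector="article"):
    """Return the text of the first element matching the CSS selector, or None."""
    soup = BeautifulSoup(html, "html.parser")
    element = soup.select_one(selector)
    if element is None:
        return None
    # One line per text fragment; whitespace-only fragments are dropped by strip=True
    return element.get_text(separator="\n", strip=True)


print(extract_article_text(SAMPLE_HTML))
```

Only the heading and two paragraphs inside `<article>` come back; the navigation links and footer are left out, which is the same idea readability applies automatically to real pages.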

Defining the API key

```python
# --- Configuration ---
openai.api_key = "sk-proj-YOUR_API_KEY_HERE"  # Replace with your actual key
```

openai.api_key = "...": This line sets your secret key for accessing the OpenAI API. Every time your script asks the AI model to do something, it sends this key along so OpenAI knows it’s you and can charge your account (or use your free credits). It’s crucial to keep this key private!
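As the comment in the script suggests, an environment variable is a safer home for the key than your source code. Here is a minimal sketch, assuming you have set a variable named `OPENAI_API_KEY` (the name the `openai` library also reads by default). The helper name `load_api_key` is hypothetical.

```python
import os


def load_api_key(var_name="OPENAI_API_KEY"):
    """Return the API key stored in an environment variable, or fail with a clear message."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable before running the script.")
    return key

# In the summarizer script you would then write:
#     openai.api_key = load_api_key()
```

Failing early with a clear message is friendlier than letting the first API call fail with an authentication error after the page has already been fetched.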

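Prompt import and error handling

This block imports the four prompt templates from blogmadeeasy.py. If the import fails, the try/except block prints an error and falls back to simple default prompts so the script doesn’t crash.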

```python
# Direct import from blogmadeeasy.py since it's in the same directory
try:
    from blogmadeeasy import short_summary_prompt, medium_summary_prompt, long_summary_prompt, seo_summary_prompt
except ImportError as e:
    print(f"Error: Could not import prompts from blogmadeeasy.py. Please ensure the file exists and contains the necessary prompt variables. Details: {e}")
    # Fallback prompts if import fails (less ideal, but prevents script crash)
    short_summary_prompt = "Summarize: {blog_content}"
    medium_summary_prompt = "Summarize in detail: {blog_content}"
    long_summary_prompt = "Provide a comprehensive summary: {blog_content}"
    seo_summary_prompt = "Create an SEO-friendly summary: {blog_content}"
```
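Both the fallback prompts and the ones in blogmadeeasy.py are plain strings with a `{blog_content}` placeholder, which the script fills in with `str.format()`. Two details are worth knowing. First, literal curly braces in a template must be doubled (`{{` and `}}`), or `format()` treats them as placeholders. Second, a misspelled placeholder only fails at run time with a `KeyError`. The sketch below shows both, using the standard library's `string.Formatter().parse()` to list a template's placeholders. The helper names `fill_prompt` and `placeholders`, and the sample templates, are made up for illustration.

```python
from string import Formatter


def fill_prompt(template, blog_content):
    """Insert the blog text into the template's {blog_content} placeholder."""
    return template.format(blog_content=blog_content)


def placeholders(template):
    """Return the set of placeholder names a str.format() template uses."""
    return {field for _, field, _, _ in Formatter().parse(template) if field is not None}


# Same shape as the fallback prompts above
print(fill_prompt("Summarize: {blog_content}", "Python makes automation easy."))

# Literal braces must be doubled; a single {"summary": ...} would be read as a placeholder
json_template = 'Return JSON like {{"summary": "..."}} for: {blog_content}'
print(fill_prompt(json_template, "Some text"))

# Catch a misspelled placeholder before it causes a KeyError at run time
typo_template = "Summarize: {blog_contents}"
if placeholders(typo_template) != {"blog_content"}:
    print("Unexpected placeholders:", sorted(placeholders(typo_template)))
```

You could run `placeholders()` over the four imported templates once at startup, so a typo in blogmadeeasy.py is reported before any content is fetched.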

Fetching content from a URL

```python
def fetch_blog_content(url):
    """Fetches the main textual content of a blog post from a given URL."""
    try:
        headers = {"User-Agent": "Mozilla/5.0 ..."}  # browser-like User-Agent (full string in the script above)
        response = requests.get(url, headers=headers, timeout=15)
        response.raise_for_status()  # Check for HTTP errors (like 404 Not Found)
        html_content = response.text  # <--- THE CRUCIAL FIX: .text gives the decoded HTML as a string
        doc = Document(html_content)  # Pass the string content to readability
        article_html = doc.summary()  # readability extracts the main article HTML
        if article_html:
            soup = BeautifulSoup(article_html, 'html.parser')
            text_content = soup.get_text(separator='\n', strip=True)
            if len(text_content) > 100:
                return text_content
        # ... fallback if readability fails or extracts little content ...
    except requests.exceptions.RequestException as e:
        print(f"Network or request error: {e}")
        return None
    except Exception as e:
        print(f"An unexpected error occurred during content extraction: {e}")
        return None
```

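This function fetches the main content from a URL. Readability extracts the article first, and if that yields too little text, the fallback searches the page for common content containers such as `article` and `main`. The rest of the code, `generate_summary()` and the loop under `if __name__ == "__main__":`, picks the prompt template and token limit for the summary type you choose, sends the request to the OpenAI model, and prints the result.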
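The if/elif chain in `generate_summary()` pairs each summary type with a prompt template and a `max_tokens` limit, then truncates prompts longer than 12,000 characters. The same logic can be written as a lookup table, which keeps all the options in one place. This is a sketch of an alternative structure, not the script’s code: `SUMMARY_SETTINGS` and `build_request` are hypothetical names, the short templates stand in for the real ones from blogmadeeasy.py, and it raises a `ValueError` where the script returns an error string. Note that the limit counts characters, not tokens, so it is only a rough guard against the model’s context limit.

```python
# Placeholder templates standing in for those imported from blogmadeeasy.py,
# paired with the same max_tokens limits the script uses
SUMMARY_SETTINGS = {
    "short": ("Summarize: {blog_content}", 100),
    "medium": ("Summarize in detail: {blog_content}", 250),
    "long": ("Provide a comprehensive summary: {blog_content}", 400),
    "seo": ("Create an SEO-friendly summary: {blog_content}", 120),
}
MAX_PROMPT_CHARS = 12000  # same character limit as the script


def build_request(blog_content, summary_type):
    """Return (prompt, max_tokens) for a summary type, truncating over-long prompts."""
    if summary_type not in SUMMARY_SETTINGS:
        raise ValueError(f"Invalid summary type {summary_type!r}; choose from {', '.join(SUMMARY_SETTINGS)}.")
    template, max_tokens = SUMMARY_SETTINGS[summary_type]
    prompt = template.format(blog_content=blog_content)
    if len(prompt) > MAX_PROMPT_CHARS:
        # In these templates the instructions come before {blog_content},
        # so cutting the end keeps the instructions intact
        prompt = prompt[:MAX_PROMPT_CHARS] + "\n\n... (content truncated due to length)"
    return prompt, max_tokens


prompt, limit = build_request("A short post about Wi-Fi fixes.", "seo")
print(limit)   # 120
print(prompt)  # Create an SEO-friendly summary: A short post about Wi-Fi fixes.
```

Adding a new summary type then means adding one dictionary entry instead of another `elif` branch.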

Prompt file code

```python
# prompts/blogmadeeasy.py

# Prompt for a concise summary suitable for social media (e.g., Twitter, Facebook status)
short_summary_prompt = """
Summarize the following blog post in 1-2 concise sentences, focusing on the core message or most actionable tip. Make it engaging for a social media audience:
{blog_content}
"""

# Prompt for a medium-length summary suitable for platforms like Medium or a newsletter snippet
medium_summary_prompt = """
Create a 2-3 paragraph summary of the following blog post. Highlight the main points, key takeaways, and why the information is valuable to the reader. Aim for an informative yet digestible overview for a platform like Medium:
{blog_content}
"""

# Prompt for a detailed summary suitable for LinkedIn or as a more in-depth internal memo
long_summary_prompt = """
Provide a comprehensive summary of the following blog post, ensuring all essential information, major arguments, and concluding thoughts are captured. This summary should be detailed enough to stand alone as a repurposable article for platforms like LinkedIn, offering a thorough understanding without needing to read the original:
{blog_content}
"""

# Prompt for an SEO-optimized summary (e.g., for meta descriptions or blog post excerpts)
seo_summary_prompt = """
Generate a compelling and search engine optimized summary (meta description) for the following blog post, ideally under 160 characters. Include relevant keywords from the text naturally. The summary should entice clicks and accurately represent the article's content:
{blog_content}
"""
```

This file defines the four summary types: short, medium, long, and SEO-optimized. The type you choose in the loop determines which prompt template is sent to the model, and therefore what kind of summary you get back. In short, that is how you can build an AI blog summarizer with Python in minutes. If you face any issues, please submit a question in our forum or email me at support@j-insights.com.